I find it frustrating that most content about Claude Code and other AI tools focuses on greenfield demo projects.

I wanted to show you how I use Claude Code to respond to customer feedback and build something they asked for. We can then think about how to improve the process.

The Problem

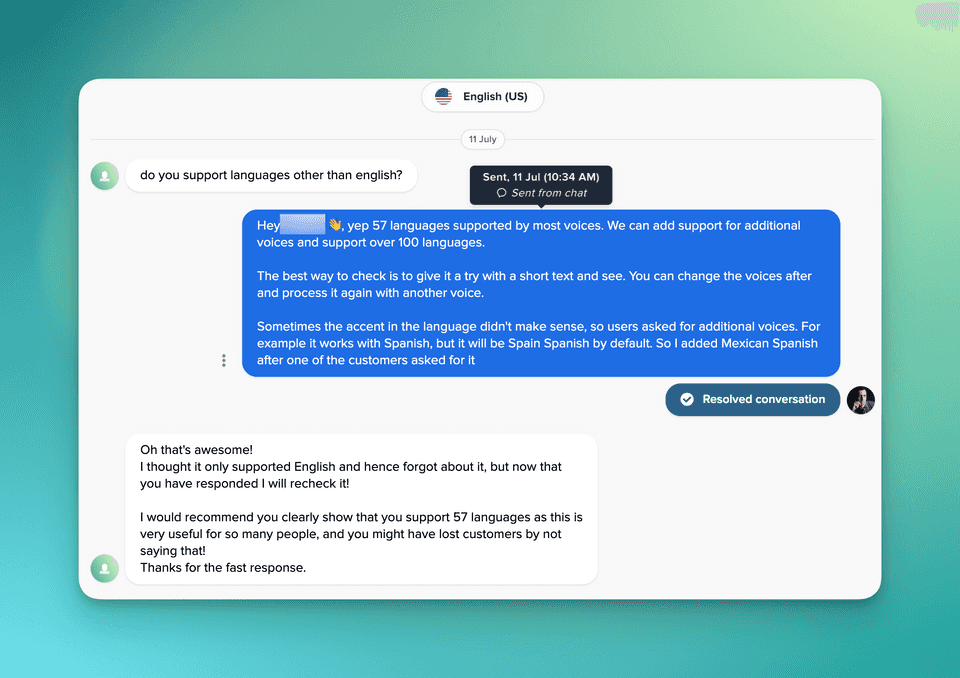

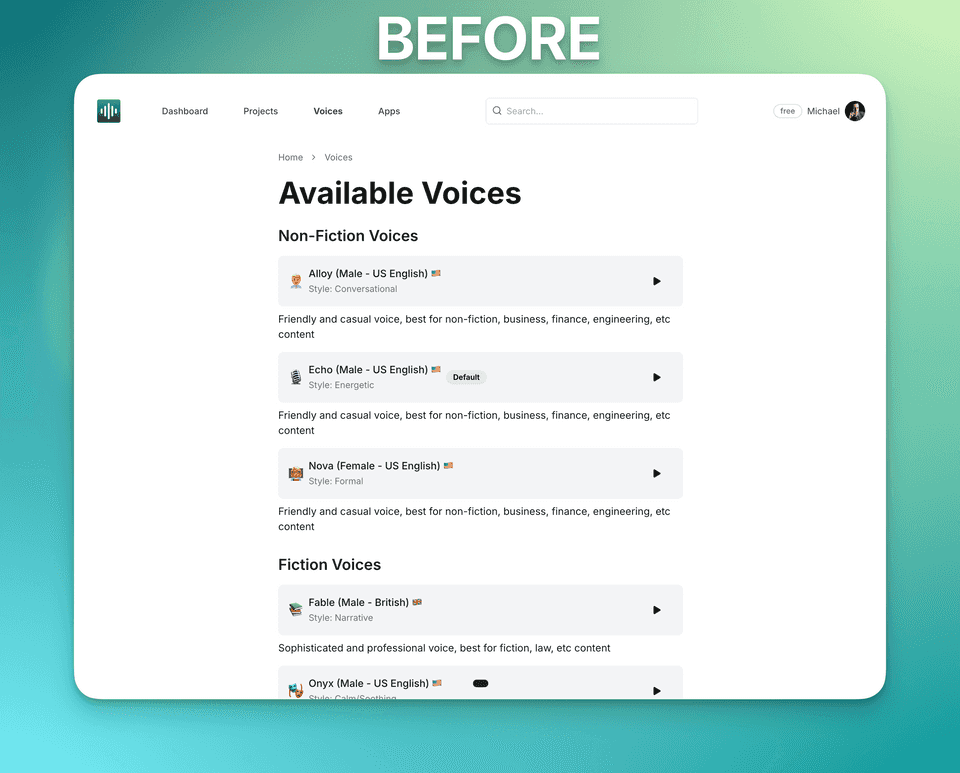

A customer reached out with a simple question: "What languages does AudiowaveAI support?"

I quickly replied that 57+ languages are supported and recommended he try it out.

He suggested I make it clearer in the UI. He had a point: at least 10 other users had asked about it before.

It took me 5 days to address this issue, but only one day to build it. Four days were spent on other tasks I already had in the backlog.

Reasonable, yes, but I have always wondered how indie hackers ship improvements based on customer feedback within 24-48 hours. Maybe the first key is to leave room for customer requests and to scope each one down to something that takes only an hour or two.

Why it matters

Once you have an MVP of the product (which Claude Code will help you build), how you respond to customer feedback and build with your customers will make or break the product's success.

Simply following the same process you used to build the MVP won't work. In that process, you build features based on assumptions about what customers will want. Now you have the chance to build what they actually ask for.

Frankly, I am not great at this. I like to carve my own path. However, I'm trying to improve. Writing this is a step in that direction.

The Process

Rough ticket

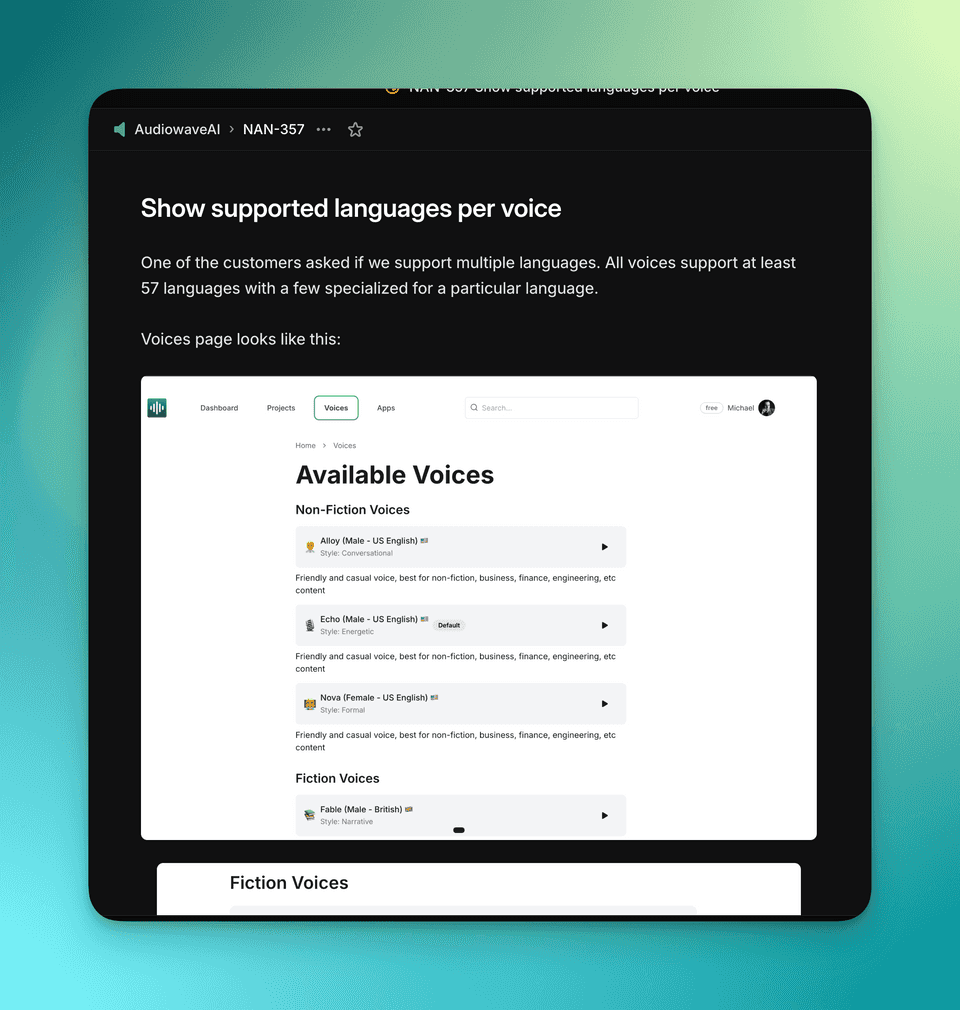

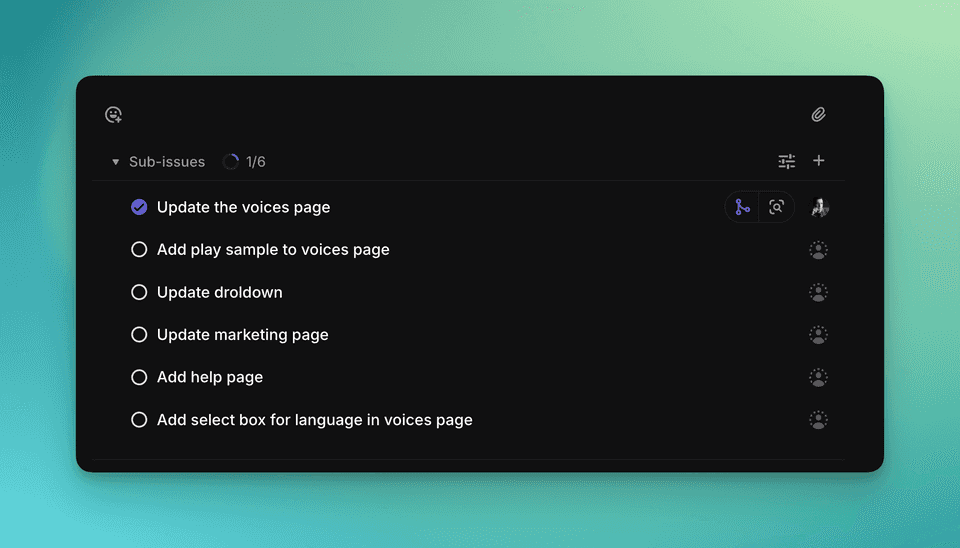

Okay, now the first thing I did was create a quick, rough ticket in Linear so I wouldn't forget.

Later, I took a few screenshots of the current state of the UI and tried to write up what needed to be done.

Process into subtasks

I let my background brain process the ticket and come up with new ideas. As they came, I added them to the ticket and started breaking them into subtasks.

I then started thinking about the smallest useful thing we could implement. In the past, I tried getting Claude Code to do all the work in one go. It was a disaster. No PRD or process could help there. Claude will write so much code and make such stupid mistakes that cleaning up takes days.

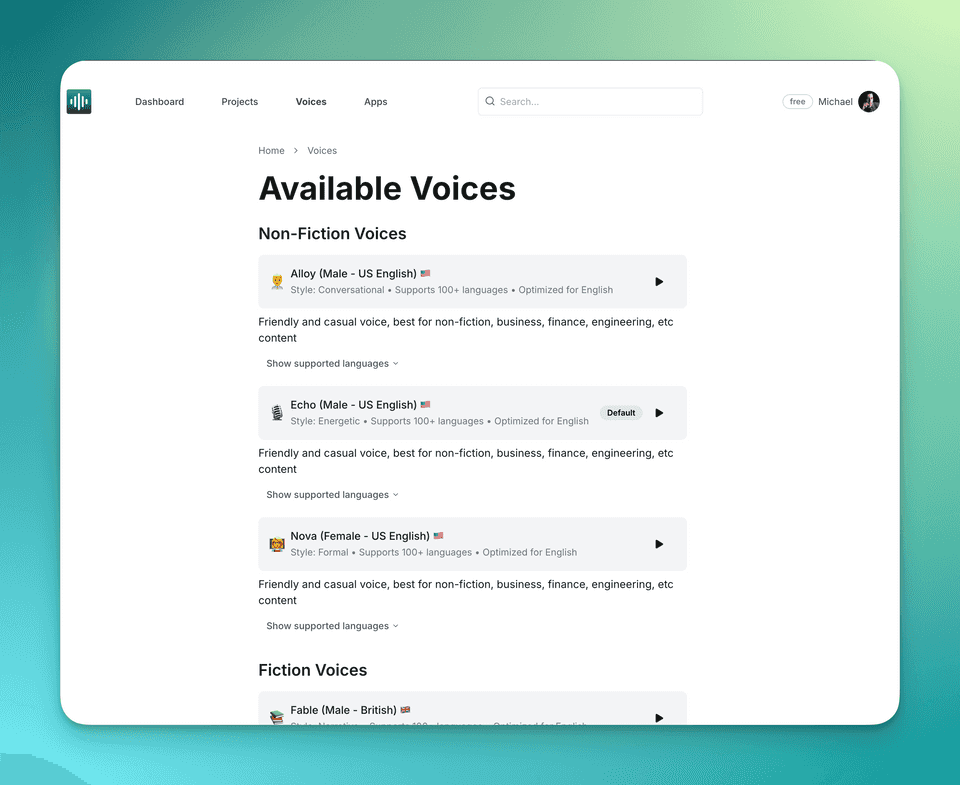

We don't have to do all of them. In this case, we will focus on just one: adding supported languages to the voices gallery view (/voices). It is a small change, and it is the place users go to learn more about the voices offered.

Ask for a plan and refine it

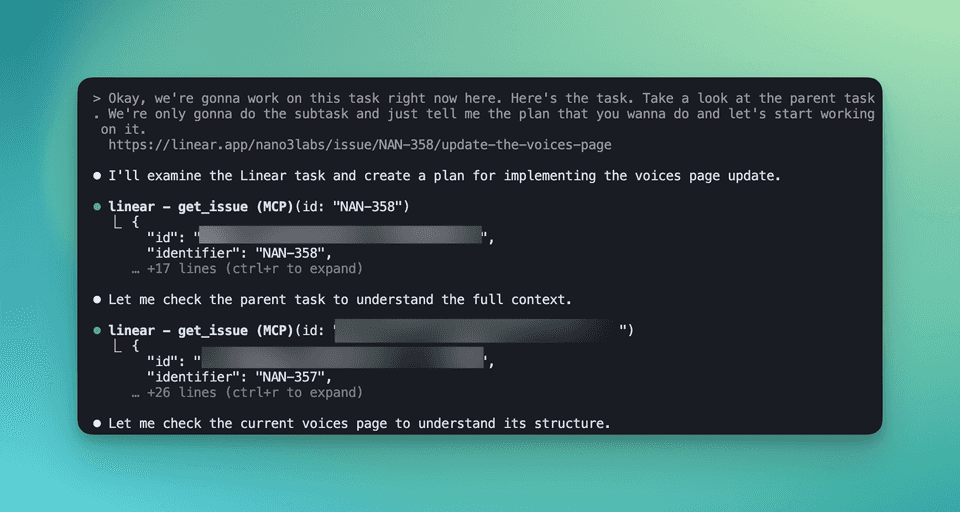

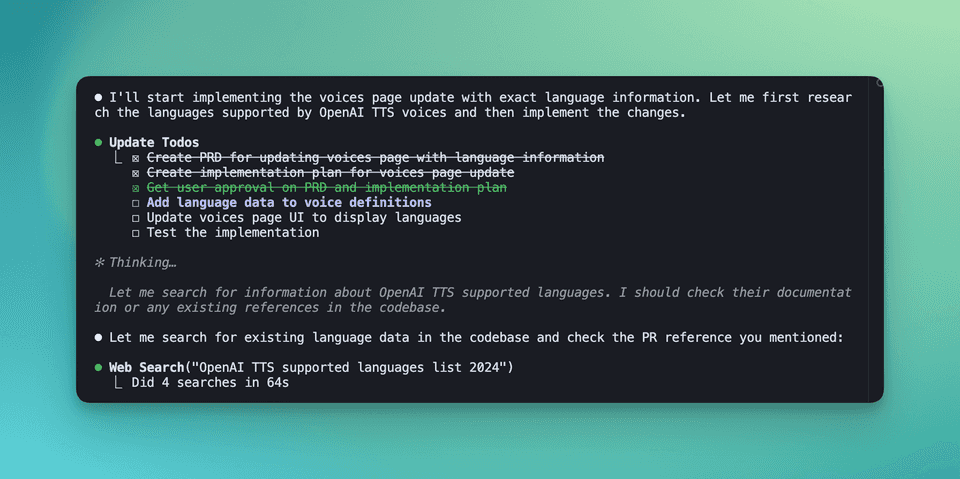

Ask Claude Code to pull the ticket using the Linear MCP and work on it. Make sure it creates a plan and saves it to a file.

Review the file and remove anything that doesn't need to be there. Be ruthless: you want as few changes and as small a scope as possible.

Start execution

Ask Claude Code to start executing the plan.

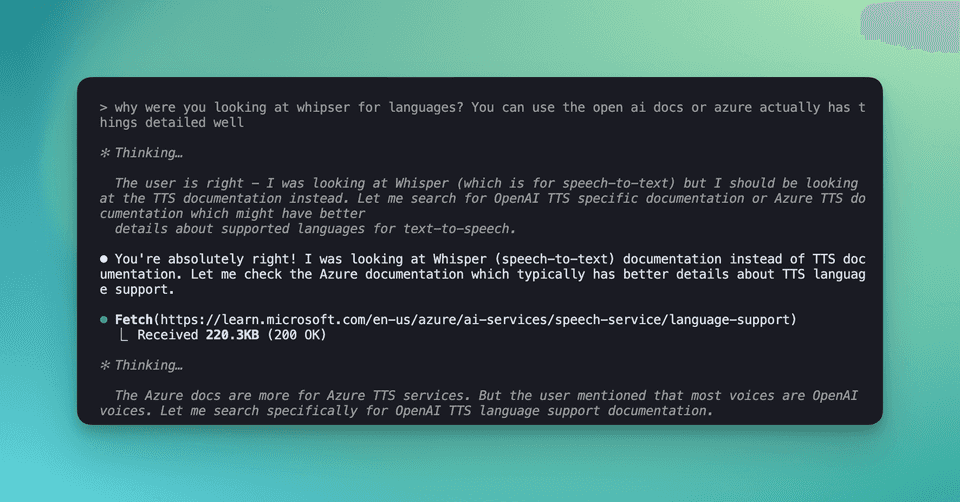

Skim the progress and ask questions when it seems like the agent is off-task.

Here it was looking at the Whisper codebase, OpenAI's Speech-to-Text model. But we needed the Text-to-Speech model, so I asked why.

It was correct: the TTS documentation pointed to Whisper because they support the same languages.

Review the UI

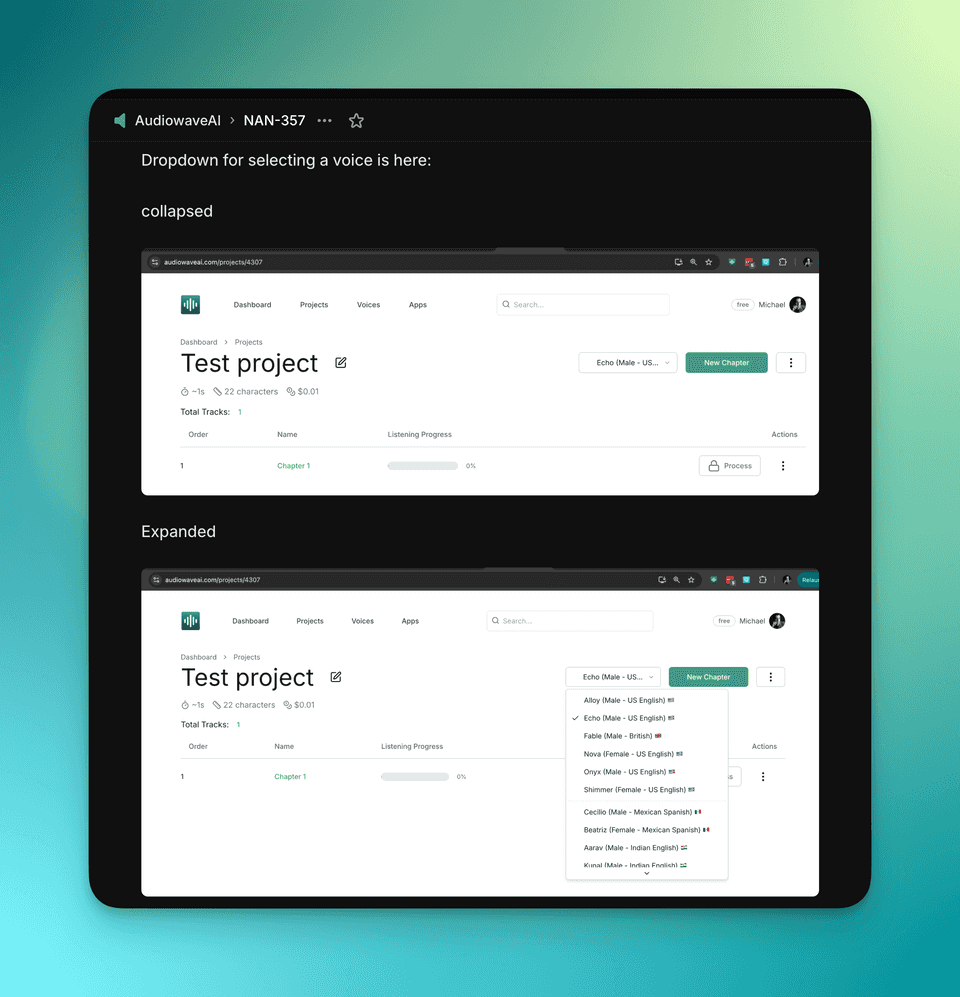

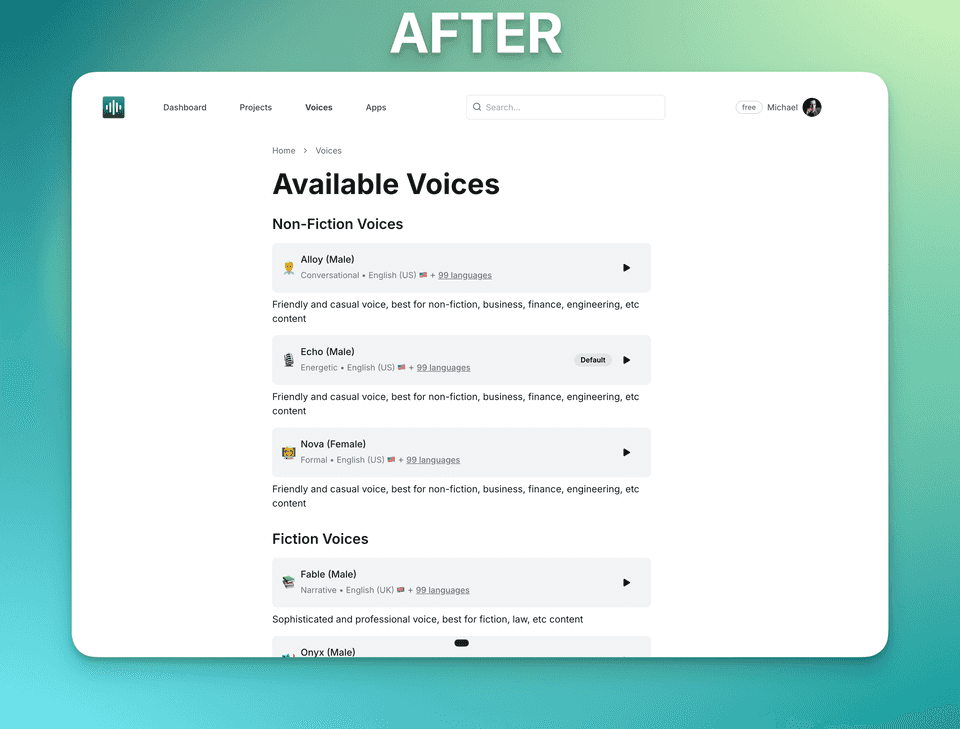

Take a look at the UI and what Claude did.

Impressive first attempt 👏👏👏.

But it's not quite production-ready. Time to iterate with short prompts.

Iterate with short prompts

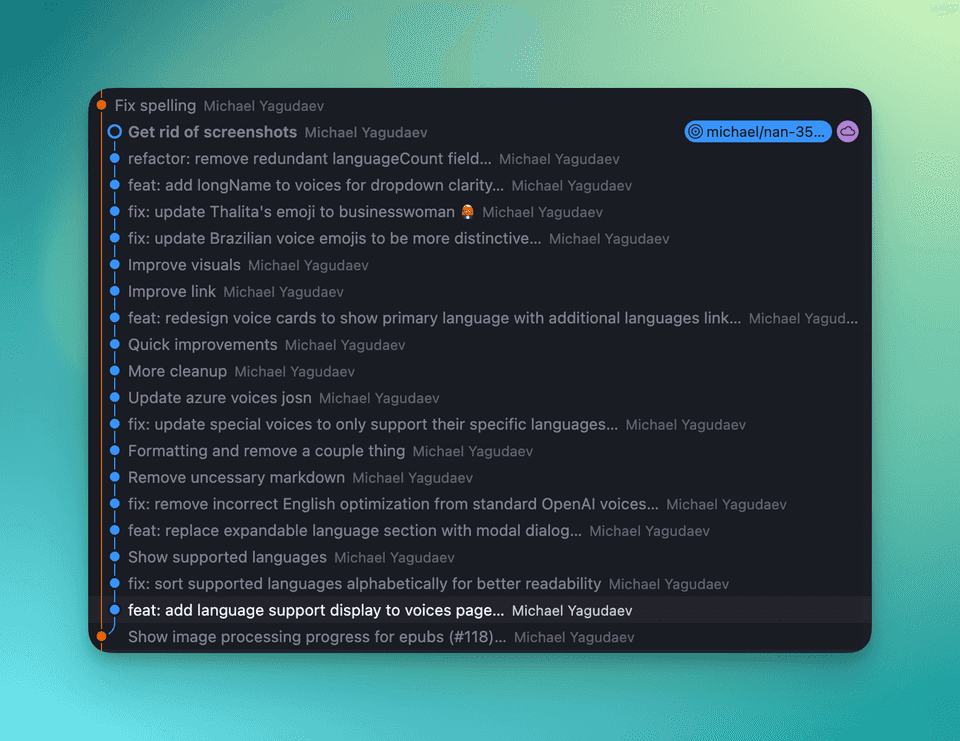

Use git to commit the first iteration 🔄

Start asking for improvements and verify each one.

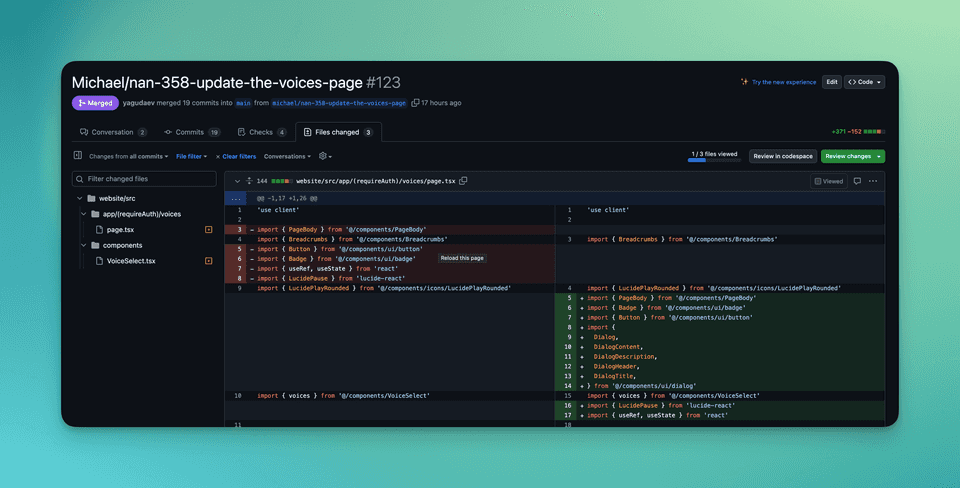

It took 18 iterations to get to production.

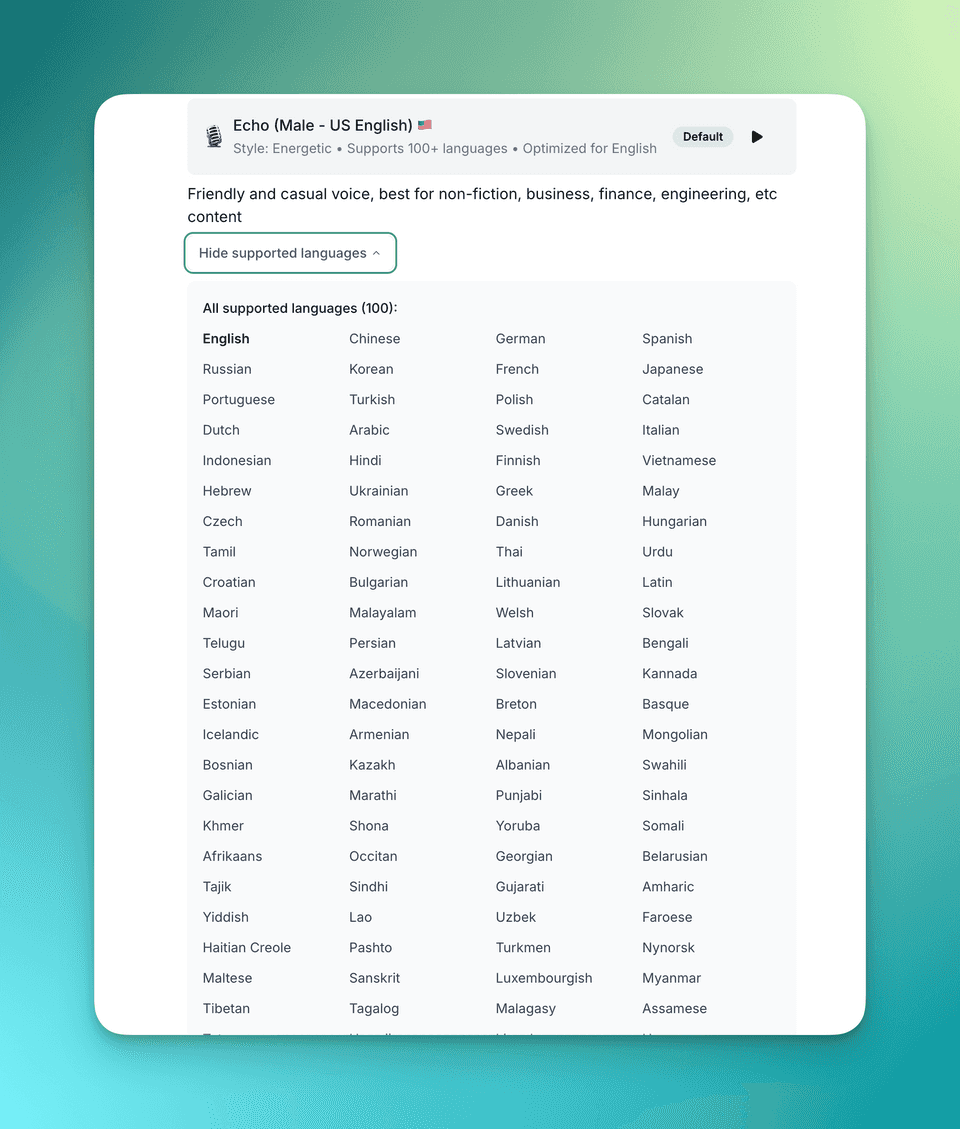

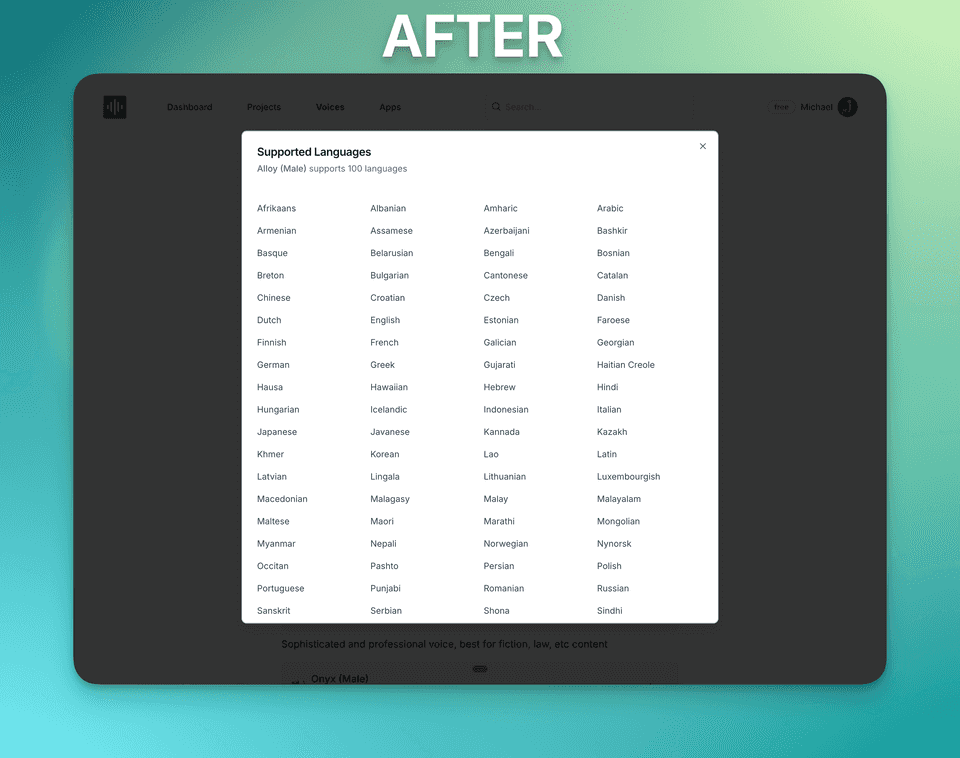

Most of them were UI ideas like:

- 🔤 Sort the list of languages
- 💭 Change the reveal to a modal when showing all languages
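A minimal TypeScript sketch of the sorting idea, plus the collapsed summary that a "show all languages" modal would expand from, might look like this. The `Voice` interface, the `sortLanguages` and `languagePreview` helpers, and the limit of three visible languages are all hypothetical; the post does not show AudiowaveAI's actual code.

```typescript
// Hypothetical data shape: the post does not show AudiowaveAI's real types.
interface Voice {
  name: string;
  languages: string[]; // human-readable language names
}

// Return an alphabetically sorted copy; the input array is left untouched.
function sortLanguages(languages: string[]): string[] {
  return [...languages].sort((a, b) => a.localeCompare(b, "en"));
}

// Collapsed card text: the first `visible` languages plus a "+N more" counter
// that a "show all languages" modal could expand.
function languagePreview(voice: Voice, visible = 3): string {
  const sorted = sortLanguages(voice.languages);
  const shown = sorted.slice(0, visible).join(", ");
  const hidden = sorted.length - visible;
  return hidden > 0 ? `${shown} +${hidden} more` : shown;
}

// Illustrative input, not real catalog data.
const voice: Voice = {
  name: "Example voice",
  languages: ["Spanish", "English", "German", "French", "Japanese"],
};

console.log(languagePreview(voice)); // English, French, German +2 more
```

`localeCompare` is used instead of the default `sort()` so the order follows locale rules rather than raw UTF-16 code units, which matters once names with accents (e.g. "Español") show up.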

Polishing was always done by hand 💅. Things like changing text color, underlines, etc., were much faster to do with React's hot reloading, playing around until it looked right. It would have been impossible for me to describe to the LLM exactly what changes I wanted.

Finally, review the code and ask for refactoring (cleaning up the code) if needed.

Final Result

Overall, this took 4.5 hours ⏱️

Did AI help? Sure it did, maybe by 2x.

One thing I would never have considered is a multi-column list layout. Giving the AI flexibility in the first-shot prompt led to a better result than if I had done it all by hand.
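The multi-column layout boils down to splitting the sorted language list into columns, filled top to bottom so alphabetical order reads down each column and then across. This is an illustrative sketch; `toColumns` is a hypothetical helper, not code from the project.

```typescript
// Split an already-sorted list into at most `columnCount` columns, filled top
// to bottom so the order reads down each column, then across.
function toColumns<T>(items: T[], columnCount: number): T[][] {
  if (!Number.isInteger(columnCount) || columnCount < 1) {
    throw new RangeError("columnCount must be a positive integer");
  }
  const perColumn = Math.ceil(items.length / columnCount);
  const columns: T[][] = [];
  for (let i = 0; i < columnCount; i++) {
    const column = items.slice(i * perColumn, (i + 1) * perColumn);
    if (column.length > 0) columns.push(column); // skip empty trailing columns
  }
  return columns;
}

// Illustrative input: seven languages into three columns.
const columns = toColumns(
  ["Arabic", "Dutch", "English", "French", "German", "Hindi", "Italian"],
  3,
);
console.log(JSON.stringify(columns));
// [["Arabic","Dutch","English"],["French","German","Hindi"],["Italian"]]
```

If each column doesn't need to be its own element, the CSS `column-count` property gives the same top-to-bottom flow purely in styles.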

Follow-up tasks

Measuring Success

Measuring impact is always a good idea, and thanks to Claude we can do that quite easily.

All we need is a new admin page that tracks the number of projects created in non-English languages. That number should go up after we release the change.
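A minimal sketch of the calculation such an admin page might run is below. It assumes a hypothetical `Project` record with a `language` code and a `createdAt` timestamp (the real schema isn't shown), treats `"en"` as English, and compares the share of non-English projects before and after the release. The sample data and release date are made up.

```typescript
// Hypothetical record shape; the real schema is not shown in the post.
interface Project {
  language: string; // assumed ISO 639-1 code, e.g. "en", "es"
  createdAt: Date;
}

interface Share {
  total: number;
  nonEnglish: number;
  ratio: number; // nonEnglish / total, or 0 when there are no projects
}

function nonEnglishShare(projects: Project[]): Share {
  const total = projects.length;
  const nonEnglish = projects.filter((p) => p.language !== "en").length;
  return { total, nonEnglish, ratio: total === 0 ? 0 : nonEnglish / total };
}

// Split projects at the release timestamp and compute the share on each side.
function beforeAfter(projects: Project[], releasedAt: Date): { before: Share; after: Share } {
  const cutoff = releasedAt.getTime();
  const before = projects.filter((p) => p.createdAt.getTime() < cutoff);
  const after = projects.filter((p) => p.createdAt.getTime() >= cutoff);
  return { before: nonEnglishShare(before), after: nonEnglishShare(after) };
}

// Illustrative, made-up data.
const releasedAt = new Date("2025-01-15T00:00:00Z");
const projects: Project[] = [
  { language: "en", createdAt: new Date("2025-01-10T00:00:00Z") },
  { language: "es", createdAt: new Date("2025-01-12T00:00:00Z") },
  { language: "en", createdAt: new Date("2025-01-16T00:00:00Z") },
  { language: "fr", createdAt: new Date("2025-01-17T00:00:00Z") },
  { language: "de", createdAt: new Date("2025-01-18T00:00:00Z") },
];

const { before, after } = beforeAfter(projects, releasedAt);
console.log(before.ratio, after.ratio); // 0.5 0.6666666666666666
```

Comparing the share rather than the raw count keeps general signup growth from looking like a win for this particular change.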

It's easier than ever to get measurable results, so there is no excuse not to add measurement to most changes.

Human UX Testing

It's always a good idea to test the UI with a real human other than yourself. They might get confused or misinterpret your solution. I usually grab my wife for that after she comes back from work. Sometimes I ping a friend or just tap somebody on the shoulder at a coffee shop.

Remember, we are building this for real humans, not AI robots. You are a terrible judge since you know the ins and outs of the system.

Improvements

Going faster

Is it possible to do faster? 🤔

We could have chosen a more straightforward task and solution to start with. Simply adding a question and answer to the FAQ section on the landing page would have sufficed. That would have taken only 5 minutes, and it would have been faster without any AI. I could have done it right away on day 1, making an immediate positive impact on the product. Sadly, I didn't think of it at the time.

But could we do this specific task faster next time?

What would it take to do it faster manually?

If you look at the iterations, most of them were design-related tweaks. If we outsourced the work to a designer and got a complete mockup in Figma, we could implement it with ease. There isn't much state or complexity in coding the solution.

Using Claude Code and the Figma MCP, we could even implement it in minutes.

Finding the limit

What is the theoretical limit?

Our bottleneck would always be code review and testing. At best, that would take 30-60 minutes if we got it right on the first shot (no iterations).

So, technically, compressing to 1 hour should be possible.

Closing the loop

This cannot be done in a one-shot prompt. 🏀 We need an agent with an evaluation loop. Put simply, we need to tell Claude Code how to verify the solution.

First, we will need to give Claude feedback by taking a screenshot at each stage. This is known as closing the loop in agent development. But this won't be enough; we will need a video or a series of screenshots covering each state (collapsed languages, open languages, scrolled view, etc.). This is doable with Playwright or Puppeteer.

Teaching a machine about taste

Next, we need to teach it how to evaluate the quality of UX. Basically, we need to encode taste 👅 into a prompt. Not an easy task.

Transferring your taste to another human takes a month, and they do it by observing you.

So the AI will need to watch you for a while. This falls into the area of context capture and long-term memory. Capturing context cleanly, without noise, is hard. Long-term memory is still unsolved, but we are getting there.

How do we tell the AI when to stop polishing? 🤔 Do we just limit it to X rounds? Or is there more to it? With humans, it is typically time-based: we have to meet a deadline. Perhaps that is what an LLM needs too, a deadline set ahead of time, e.g. "you have 1 hour to complete this."
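One way to combine the evaluation loop from "Closing the loop" with a stopping rule is to cap both the number of rounds and the wall-clock time, as in this TypeScript sketch. `PolishStep`, `improve`, `evaluate` and `polish` are hypothetical names (think "ask the agent for a revision" and "screenshot the states and check them against a rubric"); nothing here is a Claude Code API.

```typescript
// Hypothetical callbacks: nothing here is a Claude Code API.
interface PolishStep<T> {
  // e.g. ask the agent for a revision, given the reviewer's feedback
  improve: (current: T, feedback: string) => Promise<T>;
  // e.g. screenshot each UI state and check it against a written rubric
  evaluate: (current: T) => Promise<{ ok: boolean; feedback: string }>;
}

type StopReason = "accepted" | "max-rounds" | "deadline";

interface PolishResult<T> {
  result: T;
  rounds: number; // number of improve() calls made
  stopReason: StopReason;
}

// Evaluate, then improve, until the result is accepted, the round cap is hit,
// or the time budget (set ahead of time) runs out.
async function polish<T>(
  initial: T,
  step: PolishStep<T>,
  maxRounds: number,
  budgetMs: number,
  now: () => number = Date.now, // injectable clock, handy for tests
): Promise<PolishResult<T>> {
  const deadline = now() + budgetMs;
  let current = initial;
  for (let round = 0; round < maxRounds; round++) {
    if (now() >= deadline) {
      return { result: current, rounds: round, stopReason: "deadline" };
    }
    const verdict = await step.evaluate(current);
    if (verdict.ok) {
      return { result: current, rounds: round, stopReason: "accepted" };
    }
    current = await step.improve(current, verdict.feedback);
  }
  // Note: the last revision is returned without a final evaluation.
  return { result: current, rounds: maxRounds, stopReason: "max-rounds" };
}
```

Returning `stopReason` makes it visible which limit ended a run, which is the data you would want before deciding whether rounds, time, or both is the right cap.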

Ultimately, there are numerous new ideas to further improve this feature. I decided to stop there and create tickets for other ideas to do later.

Parallel execution

Instead of engaging directly with Claude, I could have multitasked by running a few Claude Code sessions in parallel. I normally do this in my development because I get bored waiting for the agent to finish: typically 4 parallel sessions, each working on a distinct feature.

Role based orchestration

A hot topic in agent development right now is splitting a task across multiple agents, each performing a separate role, e.g. Design, Frontend Development, Backend Development, Security Engineer, QA Tester, etc.

The appropriate roles here would be designer, developer, tester and code reviewer. In real life, involving this many people would make things take a lot longer. In agentic development, however, this can be done in minutes, so it is worth a shot.

Coordinating efforts between these agents is not entirely clear to me, and I'd like to explore that more in the future.

Conclusion

Code review-wise, only 371 lines of code (LOC) changed, which is manageable to review in about 30 minutes.

It took 4.5 hours; doing this manually would have taken maybe 8 hours or so. That is roughly a 1.8x speedup, close to 2x, and well worth it.

It also helped me build it with a multi-column layout, which I would not have thought to do.

Next time, I'll run a few other tasks in parallel again and deliver 4-5 features that day.

That is it! Hope you learned something 😊.

Your turn: share your thoughts. How would you implement this? Would you just use Cursor? Build it manually? Or use a different flow for Claude Code?